Meta has released its Community Standards Enforcement Report (Q2 2025) along with its Widely Viewed Content Report, offering insights into moderation actions and the type of content gaining visibility on Facebook.

While the data shows no major shifts, it does highlight how Meta’s new enforcement approach, designed to reduce moderation errors and expand free expression, has affected harmful content detection, fake account levels, and the visibility of external links.

Meta’s Shift Toward Fewer Enforcement Mistakes

In its introduction, Meta emphasized progress in reducing over-enforcement:

“Since we began our efforts to reduce over-enforcement, we’ve cut enforcement mistakes in the U.S. by more than 75% on a weekly basis.”

This follows the company’s January announcement that it would “allow more speech” while making fewer mistakes. Fewer false positives benefit users, but looser enforcement thresholds can also let more harmful content slip through.

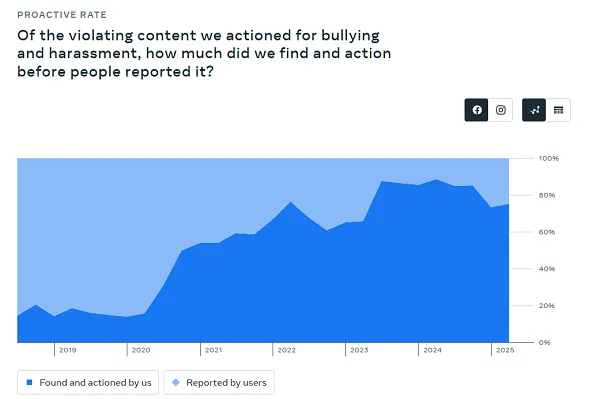

Decline in Enforcement: Bullying, Hate Speech, and Terror Content

The Q2 2025 data shows noticeable drops in enforcement for several sensitive categories:

- Bullying and Harassment: Enforcement dropped sharply, and Meta proactively detected less of this behavior before users reported it.

- Dangerous Organizations: Less enforcement against terror- and hate-related content.

- Hateful Content: Reduced enforcement compared to previous quarters.

These trends suggest that fewer enforcement mistakes may come at the cost of more harmful content reaching users.

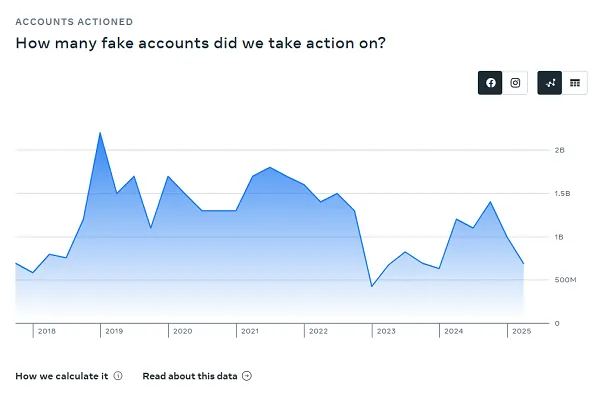

Fake Accounts: Around 4% of Facebook Users

Meta estimates that fake accounts make up 4% of Facebook’s monthly active users. That is slightly better than the long-standing 5% figure but up from the 3% reported in Q1. Detection tools continue to evolve, but fake accounts remain a persistent challenge.

Content Trends: Nudity, Sexual Activity, and Links

- Nudity and Sexual Activity: Meta attributes increases in enforcement to improved measurement, not necessarily to more violating activity.

- External Links: Only 2.2% of Facebook views in the U.S. included links to outside sources, down from 2.7% in Q1. Most of these link shares come from Pages that users already follow.

This suggests Facebook is becoming an even weaker source of referral traffic for publishers, as link-based content continues to lose reach.

Widely Viewed Content in Q2 2025

The most widely viewed Facebook posts of the quarter mixed news updates with tabloid-style stories, including:

- News of Pope Francis’ death

- A fight at a Chuck E. Cheese restaurant

- Rappers promising grills for a local football team

- A Texas woman dying from a “brain-eating amoeba”

- Emma Stone avoiding a bee attack at a red-carpet event

This reflects Facebook’s ongoing trend: a blend of serious news and viral oddities, with little space for external media links.

Oversight Board’s Role in Policy

Meta also published the Oversight Board’s 2024 Annual Report, noting that:

- Since 2021, the Board has issued more than 300 recommendations

- Meta has implemented or made progress on 74% of them, improving transparency, fairness, and human rights considerations

Even so, Meta’s broader direction is likely to stay focused on expanding free expression, in line with U.S. government expectations, for at least the next three years.

Key Takeaways for Digital Marketers

- Reduced Enforcement = Higher Risk: More harmful content may circulate despite fewer mistakes.

- Declining Link Visibility: Facebook referral traffic continues to shrink, limiting its role for publishers.

- Fake Accounts Persist: With an estimated 4% of monthly active users being fake, engagement metrics may be inflated.

- Content Trends: Viral oddities and human-interest stories dominate Facebook’s most-viewed content.

For businesses and publishers, this underlines the need to diversify traffic sources and not rely solely on Facebook for reach.

FAQ – Meta Q2 2025 Enforcement and Content Trends

What is Meta’s Community Standards Enforcement Report?

It’s a quarterly report where Meta shares data on how it moderates harmful content, including bullying, hate speech, fake accounts, and more.

What did Meta change in its enforcement in 2025?

Meta shifted toward reducing “over-enforcement.” This cut weekly moderation mistakes in the U.S. by more than 75%, but it also lowered proactive detection of harmful content.

How many fake accounts are on Facebook in Q2 2025?

Meta estimates that around 4% of monthly active users are fake accounts. That is an improvement on the long-standing 5% figure but up from 3% in Q1 2025.

Do Facebook posts with external links still perform well?

No. Only 2.2% of Facebook views in the U.S. in Q2 2025 included an external link, down from 2.7% in Q1. This suggests referral traffic from Facebook is shrinking.

What type of content was most viewed on Facebook in Q2 2025?

The most viral posts included tabloid-style stories (celebrity incidents, unusual news) and some topical updates, rather than professional media or publisher content.

How does the Oversight Board influence Meta’s policies?

Since 2021, the Board has issued 300+ recommendations, 74% of which Meta has implemented or made progress on, improving transparency and fairness.